Even as a sophomore, I'm going to contribute code to the Chromium browser engine! - GSoC 2025 Proposal - Interaction to Next Paint (INP) subparts


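gy was recently fortunate enough to take part in, and be selected for, GSoC 2025. This article is my proposal to the Chromium project team. It targets the Web Performance API and aims to improve how the browser engine handles the INP performance metric.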

Xiaohongshu 📕👉 [CQU sophomore successfully selected as a Google GSoC contributor - g122622 | Xiaohongshu - Your Life Guide] https://www.xiaohongshu.com/discovery/item/681e22300000000023011448?source=webshare&xhsshare=pc_web&xsec_token=ABqekbzfvoxIozXy36qJLYrAPjDUL5wByx4W95rEowRts=&xsec_source=pc_share

Overview & Background

Brief introduction about me

I’m a sophomore studying Computer Science at Chongqing University, China. I have been self-studying computer science since I was 9 years old and have nearly four years of experience researching the principles and design philosophies behind the Chromium project.

Recently, I happened to be studying Web performance standards. I was very excited when I came across this project on GSoC; it feels like it was meant to be!☺️

The current state of INP

INP became a Core Web Vital (CWV) last year, in March 2024, replacing First Input Delay (FID). This year, the Chromium team plans to help developers analyze INP latency issues in depth by introducing a reporting mechanism for "subparts", similar to the one that already exists for LCP. This approach breaks INP down into sub-dimensions: input delay, processing time, and presentation delay.
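To make the three subparts concrete, here is a minimal C++ sketch, assuming each interaction is described by the four values that PerformanceEventTiming exposes to JavaScript (startTime, processingStart, processingEnd, duration). The types EventTimingEntry and InpSubparts are hypothetical illustrations, not Chromium classes.

```cpp
#include <cassert>

// Hypothetical mirror of the PerformanceEventTiming values, in milliseconds.
struct EventTimingEntry {
  double start_time;        // event creation time (the event's timeStamp)
  double processing_start;  // first event listener starts running
  double processing_end;    // last event listener has returned
  double duration;          // from start_time until the next paint
};

struct InpSubparts {
  double input_delay;          // waiting before any handler runs
  double processing_duration;  // time spent inside event handlers
  double presentation_delay;   // handlers finished until the next frame is shown
};

// Splits one interaction's latency into the three INP subparts.
// Note: the web API rounds `duration` to 8 ms, so in practice the
// presentation delay derived from it is only approximate.
InpSubparts SplitIntoSubparts(const EventTimingEntry& e) {
  assert(e.start_time <= e.processing_start);
  assert(e.processing_start <= e.processing_end);
  const double next_paint = e.start_time + e.duration;
  return InpSubparts{e.processing_start - e.start_time,
                     e.processing_end - e.processing_start,
                     next_paint - e.processing_end};
}
```

The three values always add back up to `duration`, which is what makes them usable as a breakdown of a single interaction.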

The Chromium team has already implemented preliminary measurements of these timings within the Event Timing API.

The goal is to modify Chromium's Event Timing plumbing so that fine-grained timestamps (including but not limited to timeStamp, processingStart and processingEnd), which originally exist only in the Renderer process, are transmitted to the Browser process via Mojo IPC and ultimately integrated into the UKM metric system and the CrUX experimental dataset.

My understanding of how EventTiming works

This is my overall understanding of how EventTiming operates:

The EventTiming module is located in the third_party/blink/renderer/core/timing/ directory.

EventTiming::TryCreate() is the entry point for event timing capture and is primarily invoked in the following scenarios:


EventTiming uses a non-intrusive design, so its destruction is transparent to the main event dispatch flow: through C++'s RAII idiom, it automatically records the processing end time when it is destroyed:


This design also leverages the LIFO order of stack unwinding to handle nested events (such as an input event triggered within a pointer event), ensuring that Start and End calls are correctly paired and ordered.
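The RAII and LIFO behaviour described above can be illustrated with a small C++ sketch. ScopedEventTiming is a hypothetical stand-in for EventTiming: it records ordered log strings instead of real timestamps so that the nesting is visible, and the set of "measured" event types inside TryCreate is an assumption of this sketch, not Chromium's actual rule.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for EventTiming: the constructor marks processing
// start and the destructor marks processing end (RAII), so dispatch code
// never needs an explicit "end" call.
class ScopedEventTiming {
 public:
  // Mimics the "Try" in TryCreate: returns nullptr for events that are not
  // measured. Which types count as measured is assumed for illustration.
  static std::unique_ptr<ScopedEventTiming> TryCreate(
      std::vector<std::string>& log, const std::string& type) {
    if (type != "pointerdown" && type != "input" && type != "click") {
      return nullptr;
    }
    return std::unique_ptr<ScopedEventTiming>(new ScopedEventTiming(log, type));
  }
  ~ScopedEventTiming() { log_.push_back(type_ + ":end"); }
  ScopedEventTiming(const ScopedEventTiming&) = delete;
  ScopedEventTiming& operator=(const ScopedEventTiming&) = delete;

 private:
  ScopedEventTiming(std::vector<std::string>& log, std::string type)
      : log_(log), type_(std::move(type)) {
    log_.push_back(type_ + ":start");
  }
  std::vector<std::string>& log_;
  std::string type_;
};

// A pointerdown handler that synchronously dispatches a nested input event.
void DispatchPointerWithNestedInput(std::vector<std::string>& log) {
  auto outer = ScopedEventTiming::TryCreate(log, "pointerdown");
  {
    auto inner = ScopedEventTiming::TryCreate(log, "input");
    auto ignored = ScopedEventTiming::TryCreate(log, "my-custom-event");
    assert(ignored == nullptr);  // untracked type: nothing is recorded
  }  // `inner` is destroyed here, before `outer` (LIFO)
}
```

Calling `DispatchPointerWithNestedInput` produces the order pointerdown:start, input:start, input:end, pointerdown:end, because the inner object always leaves scope first.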

The event dispatch call target->DispatchEvent(*pointer_event) ultimately reaches EventTarget::FireEventListeners, which iterates over the vector of listeners registered on the target for that event type and invokes each callback in turn.

- If the listener is a JavaScript function (JSBasedEventListener), V8 is used to execute the JS callback.
- If the listener is a native C++ object (such as a built-in event handler), the C++ method is called directly.
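The two listener kinds above can be pictured as one polymorphic interface walked in a loop. The following is a simplified sketch, not Blink's code: Listener, ScriptListener, NativeListener and FireListeners are hypothetical names, and a std::function stands in for the V8 callback.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct Event {
  std::string type;
};

// Hypothetical base class standing in for Blink's listener hierarchy.
class Listener {
 public:
  virtual ~Listener() = default;
  virtual void Invoke(Event& event) = 0;
};

// Plays the role of JSBasedEventListener: in Blink, Invoke would enter V8 and
// run the script callback; here a std::function stands in for that callback.
class ScriptListener : public Listener {
 public:
  explicit ScriptListener(std::function<void(Event&)> callback)
      : callback_(std::move(callback)) {}
  void Invoke(Event& event) override { callback_(event); }

 private:
  std::function<void(Event&)> callback_;
};

// Plays the role of a native (built-in) C++ handler.
class NativeListener : public Listener {
 public:
  explicit NativeListener(int& calls) : calls_(calls) {}
  void Invoke(Event&) override { ++calls_; }

 private:
  int& calls_;
};

// Simplified FireEventListeners: visits listeners in registration order and
// calls each one synchronously; Invoke returns only after the handler is done.
int FireListeners(Event& event,
                  const std::vector<std::unique_ptr<Listener>>& listeners) {
  int fired = 0;
  for (const auto& listener : listeners) {
    assert(listener != nullptr);
    listener->Invoke(event);
    ++fired;
  }
  return fired;
}
```

The dispatch loop does not need to know which kind of listener it is calling; virtual dispatch picks the JS path or the native path.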

For the former case (JS listeners), invoking the JS callback in JSBasedEventListener::Invoke is fully synchronous:


InvokeInternal eventually executes the JS function through V8's v8::Function::Call(). The main thread waits here synchronously for the JS function to finish; while it does, the thread is blocked and cannot handle any other tasks.
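Because the call is synchronous, everything a handler does falls inside the processing window that Event Timing measures. The sketch below shows this with plain C++; RunHandlerSynchronously and ProcessingWindow are hypothetical names, and `std::this_thread::sleep_for` merely stands in for an expensive JS handler.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

using Clock = std::chrono::steady_clock;

struct ProcessingWindow {
  Clock::time_point processing_start;
  Clock::time_point processing_end;
};

// Runs `handler` synchronously on the calling thread and timestamps around it.
// The call does not return until the handler finishes, so all of the
// handler's work falls inside [processing_start, processing_end], and the
// calling thread can do nothing else in the meantime.
ProcessingWindow RunHandlerSynchronously(const std::function<void()>& handler) {
  ProcessingWindow window;
  window.processing_start = Clock::now();
  handler();
  window.processing_end = Clock::now();
  // steady_clock never goes backwards.
  assert(window.processing_start <= window.processing_end);
  return window;
}
```

A handler that blocks for 20 ms therefore yields a processing duration of at least 20 ms, which is exactly the "processing time" subpart of INP.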

How INP data is passed from the renderer process to the browser process:

This class diagram illustrates: event handling starting in WindowPerformance ➡️ metric calculation in ResponsivenessMetrics ➡️ step-by-step reporting through the framework client layer ➡️ data aggregation in PageTimingMetricsSender ➡️ cross-process transmission using mojom structs

(the direction of the arrows indicates the direction of calls/data flow)
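The "aggregate, then cross the process boundary" step at the end of that chain can be sketched as a small buffering sender. This is an illustration under assumptions: MetricsSenderSketch and InteractionRecord are hypothetical, the send callback stands in for a Mojo remote, and Flush is called directly here where a real sender would likely be driven by a timer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical per-interaction payload that would later be serialized into a
// mojom struct.
struct InteractionRecord {
  uint64_t interaction_id;
  double input_delay_ms;
  double processing_ms;
  double presentation_delay_ms;
};

// Stand-in for the IPC endpoint (a Mojo remote in Chromium).
using SendFn = std::function<void(const std::vector<InteractionRecord>&)>;

// Hypothetical sender: collects records and ships them in one batch.
class MetricsSenderSketch {
 public:
  explicit MetricsSenderSketch(SendFn send) : send_(std::move(send)) {}

  void AddInteraction(const InteractionRecord& record) {
    assert(record.interaction_id != 0);  // only real interactions are queued
    pending_.push_back(record);
  }

  // Sends everything buffered so far; does nothing if the buffer is empty.
  void Flush() {
    if (pending_.empty()) return;
    send_(pending_);
    pending_.clear();
  }

  std::size_t pending() const { return pending_.size(); }

 private:
  SendFn send_;
  std::vector<InteractionRecord> pending_;
};
```

Batching keeps the number of IPC messages low when many interactions happen in quick succession.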

Tasks

1. Update the PageLoadMetricsSender mojo struct

Mojo is a cross-platform IPC framework that was born out of Chromium to facilitate intra-process and inter-process communication within Chromium. I am very familiar with its principles.

Change it to report every subpart of each event timing entry that has a non-zero interactionId, rather than just a single duration value.
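The difference between the old and the new reporting shape can be shown in a few lines. In the Event Timing API, an interactionId of 0 means the event is not part of a user interaction, so such entries are skipped. TimingEntry, LegacyDurations and EntriesToReport are hypothetical names used only for this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <iterator>
#include <vector>

struct TimingEntry {
  uint64_t interaction_id;  // 0 = not part of a user interaction
  double duration_ms;
  double input_delay_ms;
  double processing_ms;
  double presentation_delay_ms;
};

// Old shape (illustrative): only one duration number survives per entry.
std::vector<double> LegacyDurations(const std::vector<TimingEntry>& entries) {
  std::vector<double> out;
  for (const auto& e : entries) {
    if (e.interaction_id != 0) out.push_back(e.duration_ms);
  }
  return out;
}

// New shape: every interaction entry is kept, with its subparts intact.
std::vector<TimingEntry> EntriesToReport(const std::vector<TimingEntry>& entries) {
  std::vector<TimingEntry> out;
  std::copy_if(entries.begin(), entries.end(), std::back_inserter(out),
               [](const TimingEntry& e) { return e.interaction_id != 0; });
  return out;
}
```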

The general idea: I think we can refer to some fields in the following C++ structure in performance_event_timing.h to customize the PageLoadMetricsSender.😉


Therefore, based on the above C++ code, the following metrics can be derived:

(This table clearly outlines each metric’s details. The metric I highlighted in bold is the most important 🚧)

| Metric Name | Calculation Formula | Description |
|---|---|---|
| Enqueue Delay (part of Input Delay) | enqueued_to_main_thread_time - creation_time | Measures the delay from when an event is created to when it is added to the main thread's queue. This can help identify whether events wait a long time before being processed. Together with Processing Delay, it makes up INP's input delay (processing_start_time - creation_time). |
| Processing Delay | processing_start_time - enqueued_to_main_thread_time | The time from when an event is added to the main thread queue to when processing begins. It reflects how the main thread's busyness affects event handling. |
| Processing Duration | processing_end_time - processing_start_time | The time spent processing the event. This helps in understanding the efficiency of the event processing logic. |
| Render Preparation Time | render_start_time - processing_end_time | The time from the end of event processing to the start of rendering. It may include style calculation, layout, and other preparatory work before rendering. |
| Render Duration | commit_finish_time - render_start_time | The actual time spent rendering, from the start of rendering until the commit finishes. |
| Presentation Delay | presentation_time - commit_finish_time | The delay from when the commit finishes to when the frame is finally presented to the user. This part can reveal bottlenecks in compositing and display. Note that INP's own presentation delay subpart (presentation_time - processing_end_time) spans this row and the two rows above it combined. |
| Total Interaction to Presentation Time | presentation_time - creation_time (overall time) | The core quantity behind INP (Interaction to Next Paint): the total time from event creation (creation_time) to presentation to the user (presentation_time), i.e. the sum of the six parts above. It can be used to directly assess the user's interaction experience. |
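As a sanity check on the formulas in this table, the following sketch computes every row from a set of timestamps. The field names follow the table; the real struct in performance_event_timing.h may use different types (for example base::TimeTicks) and layout, so plain doubles in milliseconds and the names EventTimestamps, Breakdown and ComputeBreakdown are assumptions of this sketch.

```cpp
#include <cassert>

struct EventTimestamps {
  double creation_time;
  double enqueued_to_main_thread_time;
  double processing_start_time;
  double processing_end_time;
  double render_start_time;
  double commit_finish_time;
  double presentation_time;
};

struct Breakdown {
  double enqueue_delay;
  double processing_delay;
  double processing_duration;
  double render_preparation_time;
  double render_duration;
  double presentation_delay;
  double total;  // presentation_time - creation_time
};

Breakdown ComputeBreakdown(const EventTimestamps& t) {
  // This sketch assumes the timestamps are non-decreasing in pipeline order.
  assert(t.creation_time <= t.enqueued_to_main_thread_time);
  assert(t.enqueued_to_main_thread_time <= t.processing_start_time);
  assert(t.processing_start_time <= t.processing_end_time);
  assert(t.processing_end_time <= t.render_start_time);
  assert(t.render_start_time <= t.commit_finish_time);
  assert(t.commit_finish_time <= t.presentation_time);

  Breakdown b;
  b.enqueue_delay = t.enqueued_to_main_thread_time - t.creation_time;
  b.processing_delay = t.processing_start_time - t.enqueued_to_main_thread_time;
  b.processing_duration = t.processing_end_time - t.processing_start_time;
  b.render_preparation_time = t.render_start_time - t.processing_end_time;
  b.render_duration = t.commit_finish_time - t.render_start_time;
  b.presentation_delay = t.presentation_time - t.commit_finish_time;
  b.total = t.presentation_time - t.creation_time;
  return b;
}
```

Because each row subtracts adjacent timestamps, the six parts telescope back to the total, and the first two rows together give the input delay used by INP.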

Below is the original code in components/page_load_metrics/common/page_load_metrics.mojom:

Modified code:

I added an InteractionSubpartTiming struct specifically to carry the subpart timing data, while retaining the existing UserInteractionLatency as a container struct that nests the new data through a subparts field.
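One way to picture this nesting on the C++ side is shown below. It is a hypothetical mirror, not generated Mojo bindings: the field lists are assumptions. It assumes the subparts field is declared nullable in mojom (InteractionSubpartTiming?), which maps naturally onto std::optional, so records from senders that only know the old format remain valid. SubpartsConsistent is a hypothetical receiver-side check.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <optional>

// Hypothetical C++ mirror of the proposed mojom layout; all field names are
// assumptions for illustration.
struct InteractionSubpartTiming {
  double input_delay_ms;
  double processing_time_ms;
  double presentation_delay_ms;
};

struct UserInteractionLatency {
  uint64_t interaction_id;
  double interaction_latency_ms;  // existing single value, kept for old consumers
  std::optional<InteractionSubpartTiming> subparts;  // new, may be absent
};

// Check a receiver could run: when subparts are present, they should add up
// to the reported latency within a tolerance (values may be rounded separately).
bool SubpartsConsistent(const UserInteractionLatency& l, double tolerance_ms) {
  assert(tolerance_ms >= 0.0);
  if (!l.subparts) return true;  // old-format record: nothing to check
  const InteractionSubpartTiming& s = *l.subparts;
  const double sum =
      s.input_delay_ms + s.processing_time_ms + s.presentation_delay_ms;
  return std::fabs(sum - l.interaction_latency_ms) <= tolerance_ms;
}
```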

Update blink/Renderer side “plumbing” to migrate these values from window_performance.cc / responsiveness_metrics.cc to the PageTimingMetricsSender.

I plan to move this logic into the function below and use my new mojo struct to deliver the data.


2. From Browser, update the UkmPageLoadMetricsObserver

- From Browser, update the UkmPageLoadMetricsObserver:
  - Change PageLoadMetricsUpdateDispatcher::UpdatePageInputTiming and the ResponsivenessMetricsNormalization helper to use this new mojo struct format in order to re-implement the existing UKM reporting of INP and NumInteractions.
  - (There are other PageLoadMetricsObserver types which may require similar updates.)
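The INP value reported to UKM is not simply the maximum latency. Below is a minimal sketch of the publicly documented definition (web.dev: one of the highest interactions is ignored for every 50 interactions), not Chromium's code. ApproximateInp is a hypothetical name, and the real ResponsivenessMetricsNormalization helper may implement the rule differently, for example by keeping only a bounded list of the worst latencies instead of sorting all of them.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Approximates a page's INP from all of its interaction latencies: report the
// worst interaction, but skip one of the highest for every 50 interactions so
// rare outliers on interaction-heavy pages do not dominate.
double ApproximateInp(std::vector<double> latencies_ms) {
  assert(!latencies_ms.empty());
  std::sort(latencies_ms.begin(), latencies_ms.end(), std::greater<double>());
  // n / 50 is always a valid index for n >= 1.
  const std::size_t index = latencies_ms.size() / 50;
  return latencies_ms[index];
}
```

NumInteractions is just the number of latencies passed in, so both UKM values can be derived from the same per-interaction list.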

To accommodate the changes to the UserInteractionLatency struct, I propose the following scheme, which uses the strategy pattern to handle both the old and the new data formats.😊
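The strategy-pattern idea can be sketched roughly as follows. This is a hypothetical illustration, not the proposal's actual code: LatencyRecord, LatencyStrategy, LegacyStrategy, SubpartStrategy and SelectStrategy are invented names, and choosing the strategy per record based on whether subparts are present is an assumption.

```cpp
#include <cassert>
#include <optional>

struct Subparts {
  double input_delay;
  double processing;
  double presentation_delay;
};

struct LatencyRecord {
  double latency_ms;                 // old format: single value
  std::optional<Subparts> subparts;  // new format: present when available
};

// Strategy interface: how one record is turned into the latency used for INP.
class LatencyStrategy {
 public:
  virtual ~LatencyStrategy() = default;
  virtual double Latency(const LatencyRecord& r) const = 0;
};

// Old data: trust the single reported value.
class LegacyStrategy : public LatencyStrategy {
 public:
  double Latency(const LatencyRecord& r) const override { return r.latency_ms; }
};

// New data: rebuild the latency from its subparts.
class SubpartStrategy : public LatencyStrategy {
 public:
  double Latency(const LatencyRecord& r) const override {
    assert(r.subparts.has_value());
    const Subparts& s = *r.subparts;
    return s.input_delay + s.processing + s.presentation_delay;
  }
};

// Picks a strategy per record, so old and new formats can be mixed.
const LatencyStrategy& SelectStrategy(const LatencyRecord& r) {
  static const LegacyStrategy legacy{};
  static const SubpartStrategy subpart{};
  if (r.subparts) return subpart;
  return legacy;
}
```

Keeping the selection in one function means UKM-side code can consume mixed batches during a migration without branching at every call site.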

Original code:

Modified code:

My modification approach:

Complementary Test Cases:

(Some objects and functions, such as MakeInteraction and HybridDataProcessing, are defined by me. Due to article length limits, their definitions are omitted here 🥲)

Affected Modules & Files

Blink renderer core

- blink/renderer/core/timing/window_performance.cc
- blink/renderer/core/timing/responsiveness_metrics.cc

metrics_sender

- components/page_load_metrics/renderer/page_timing_metrics_sender.cc
- components/page_load_metrics/common/page_load_metrics.mojom

UKM

- chrome/browser/page_load_metrics/observers/core/ukm_page_load_metrics_observer.cc
- components/page_load_metrics/browser/responsiveness_metrics_normalization.cc
- tools/metrics/ukm/ukm.xml

Development Process

| Phase | Weeks | Deliverables | Risk Control |
|---|---|---|---|
| Environment Setup & Prototype Verification | 2 | Chromium debugging environment, PoC for sub-dimension data collection | Accelerate compilation using GCP instances |
| Mojo Protocol Modification | 3 | Extended IPC interfaces, modifications to the renderer process | Submit incremental CLs (Change Lists) and get timely code reviews |
| UKM Integration | 2 | New UKM metrics reporting, update ukm.xml | Update the validation rules of ukm.xml in sync |
| Test Suite Development | 2 | Web Platform Tests (WPT) cases, unit test coverage | Utilize the Chromium test framework |
| Performance Regression Testing | 1 | Benchmark reports, memory usage analysis | Use the Telemetry performance testing framework |
| Documentation & Wrap-up | 2 | Design documentation, CrUX integration plan | Reserve buffer time |

My Contributions to Other Open Source Projects

VSCode

- Scrollbar for File menu is displaying over Open Recent by g122622 · Pull Request #236998 · microsoft/vscode

- Fixes a general problem in VSCode's menu cascading system. The issue is not limited to the "Open Recent" menu; it affects every submenu that contains a vertical scrollbar. The root cause lies in VSCode's flawed management of cascading relationships between menus.

- This PR Fixes Issue #243134 : Chat Item Display Issue in VS Code Copilot When HTML Tags Start the Input by g122622 · Pull Request #243135 · microsoft/vscode

- Fix a bug related to chat components.

Bytenode

- https://github.com/microsoft/vscode/pull/243135

- Fix a bug related to the build artifacts.

About Me

I’m a sophomore studying Computer Science at Chongqing University, with a strong interest in browser internals. As a heavy GitHub user, I’m deeply familiar with collaborative workflows on the platform, including issue tracking, pull requests, and code reviews. I enjoy writing clean, maintainable code and have solid experience with design patterns and object-oriented programming.

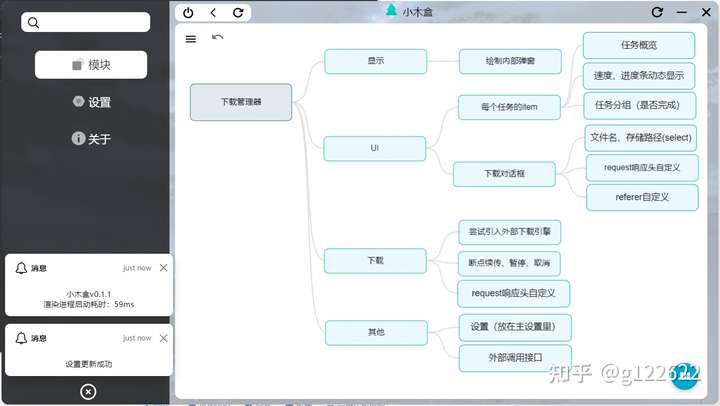

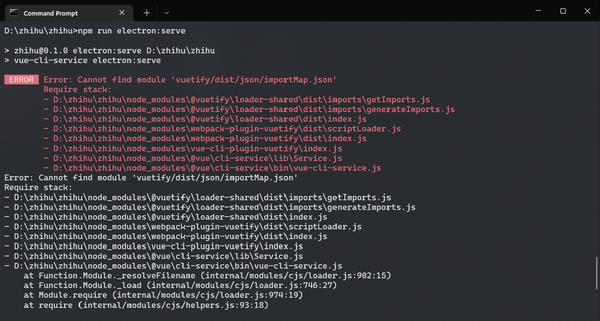

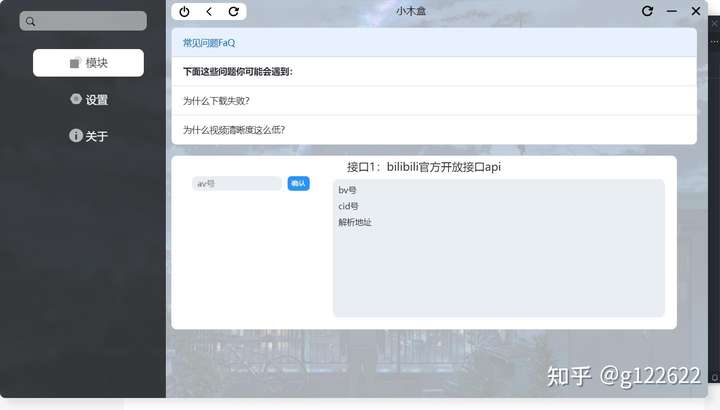

Recently, I’ve been diving into the Chromium codebase, focusing on its architecture and core components. Through hands-on exploration, I’ve gained practical knowledge of the Mojo IPC framework and learned to utilize Web Performance APIs to analyze and optimize rendering pipelines. To solidify my understanding, I’ve built small experimental modules that interact with Chromium’s internals, though these are still works in progress.

My journey started when I became curious about how browsers render web pages efficiently. Books like How Browsers Work and Chromium’s official documentation became my go-to resources for self-learning. I’ve also documented some of my explorations through technical blog posts (written in Chinese), including an analysis of Chromium’s multi-process architecture and a tutorial on measuring page load metrics using PerformanceObserver.

Extra Info

Name: Yi Guo

Email: 20230503@stu.cqu.edu.cn

Github: github.com/g122622

Time Zone: UTC+08:00 (China)

Location: Chongqing, China